Developing AIGC using DNN through MYIR's Renesas RZ/G2L based Board

2023-12-07


This evaluation report was contributed by the developer "ALSET" on MYIR's forum. It shows how to generate AIGC images with deep neural networks (DNN) on MYIR's MYD-YG2LX development board, which is based on the Renesas RZ/G2L processor.

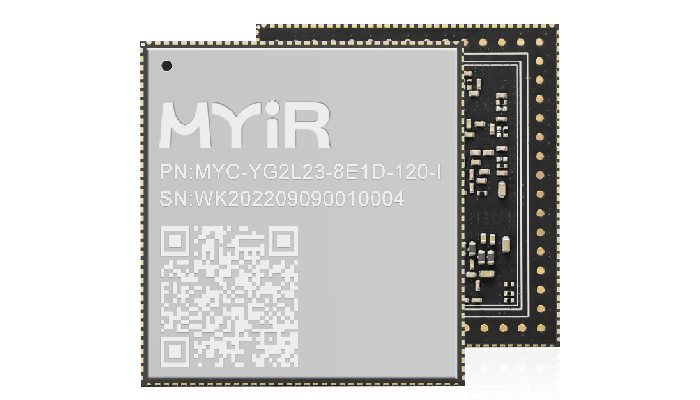

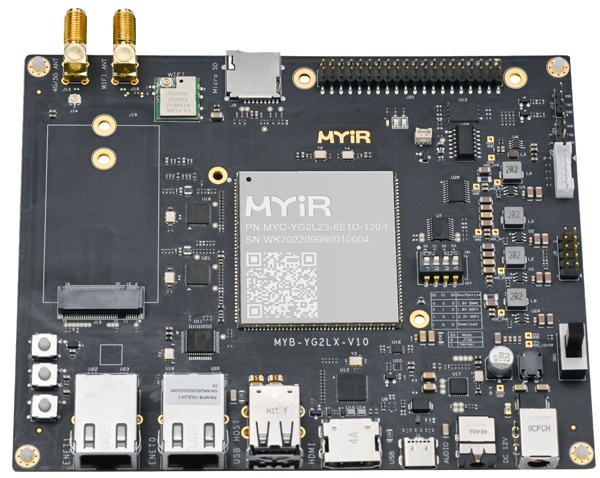

MYD-YG2LX Development Board

1. Project Background

AIGC (Artificial Intelligence Generated Content) relies on neural network models trained on large numbers of features to generate new, creative content on demand. Work that used to depend on human thinking and creativity can now be done, at least in part, with artificial intelligence. In a narrow sense, AIGC means producing content automatically with AI, such as automatic writing and automatic design. In a broad sense, AIGC refers to AI technology that uses large generative algorithms to assist or replace humans in creating large volumes of high-quality, human-like content faster and at lower cost, based on prompts provided by the user.

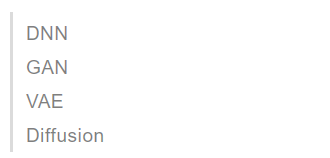

The four main representative image generation models are as follows:

Image artistic style transfer based on deep neural networks (DNN) produces high-quality images in an artistic style. The model extracts content features and style features from images with a deep neural network, then recombines them to generate an image that keeps the content of the original image while taking on the artistic style. Style transfer changes the patterns, colors, features and so on of the image, but preserves the highly recognizable content of the original.

AIGC generally demands high hardware performance and usually runs only on high-performance graphics workstations or servers with powerful GPUs. Here we use MYIR's MYD-YG2LX development board to perform image style transfer on an embedded device. By adding a graphical interface and a USB camera, we build an application that stylizes any captured photo, so that users can experience this kind of AIGC content generation on an embedded device.

2. Hardware Solution

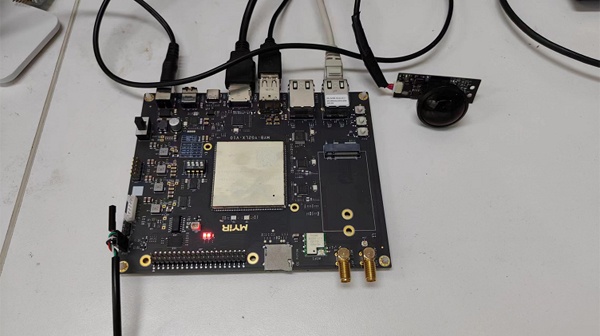

The project uses the MYD-YG2LX as the main controller board together with an 8-megapixel, 4K-class wide-angle USB camera. The camera captures images, the main controller board applies the style transfer, and the processed images are displayed over HDMI.

The development board is connected to an HDMI display and the 4K high-definition camera. The main hardware connections are shown in the diagram.

3. Main Technical Principles

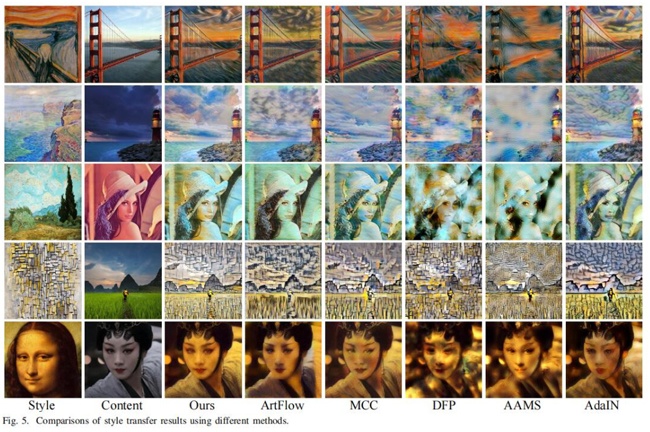

Image style transfer takes two inputs, img_content and img_style, and combines the content of img_content with the style of img_style to create a new image. In the following figures, the first column is the original input image, the second column is the style image, and the third column is the stylized output under various control parameters:

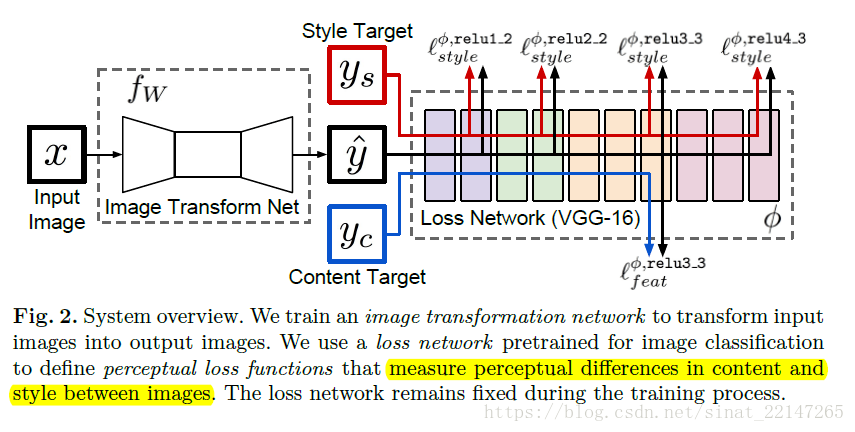

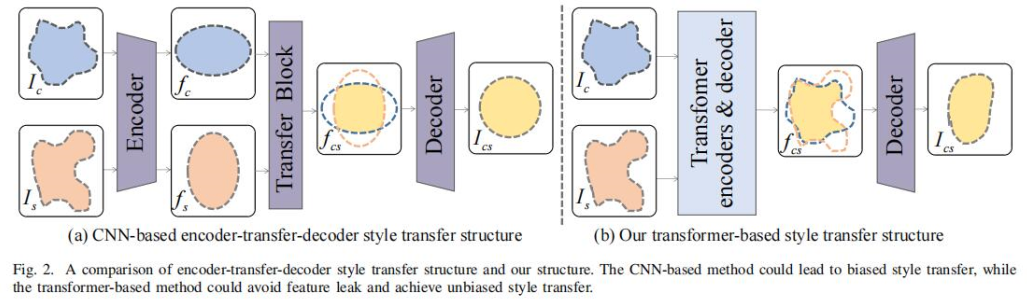

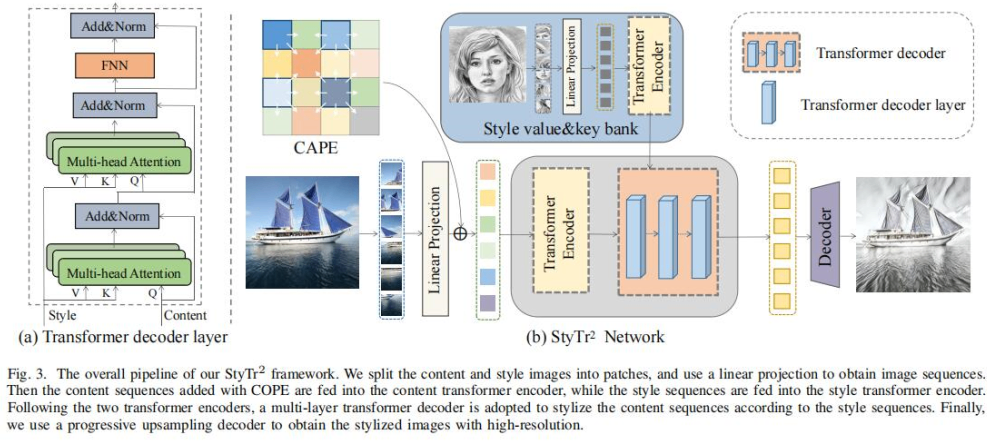

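The core algorithm uses CNN convolution layers to encode the input image into feature maps, passes these features through an image transformation network trained for the style transfer task, and then decodes the mixed result into new image content. The approach mainly follows the work of Fei-Fei Li's group (Johnson, Alahi and Fei-Fei, "Perceptual Losses for Real-Time Style Transfer and Super-Resolution", ECCV 2016), on which fast-neural-style is based. The core workflow is illustrated in the following diagram: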

Testing shows that the MYD-YG2LX supports the OpenCV library well and has strong image processing capabilities, so OpenCV's DNN module can be used to implement the above algorithm on the board. Since its release, the OpenCV DNN module has been designed for inference; training is not one of its goals. We therefore use an already trained model and run only the inference step, that is, the style transfer generation, on the board. OpenCV's DNN module can load models from TensorFlow, PyTorch/Torch, Caffe, DarkNet, and other frameworks.
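As a rough orientation, the sketch below maps common model-file extensions to loader functions that exist in OpenCV's `cv2.dnn` module. The mapping table and the helper `loader_for` are illustrative assumptions, not part of the original project. Note that PyTorch models are normally exported to ONNX before OpenCV reads them, while `readNetFromTorch` targets the older Lua Torch7 format; `cv2.dnn.readNet` can also pick the loader from the file extension on its own.

```python
import os

# Illustrative mapping: file extension -> loader function in cv2.dnn.
LOADERS = {
    ".t7": "readNetFromTorch",          # Lua Torch7 (e.g. fast-neural-style)
    ".net": "readNetFromTorch",         # Lua Torch7, alternative extension
    ".pb": "readNetFromTensorflow",     # TensorFlow frozen graph
    ".caffemodel": "readNetFromCaffe",  # Caffe weights (plus a .prototxt)
    ".weights": "readNetFromDarknet",   # DarkNet weights (plus a .cfg)
    ".onnx": "readNetFromONNX",         # ONNX, e.g. exported from PyTorch
}

def loader_for(model_file):
    """Return the name of the cv2.dnn loader suited to a model file."""
    ext = os.path.splitext(model_file)[1].lower()
    if ext not in LOADERS:
        raise ValueError("unsupported model format: " + ext)
    return LOADERS[ext]
```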

Performing model inference with OpenCV's DNN module is relatively straightforward, and the process can be summarized as follows:

1. Model loading

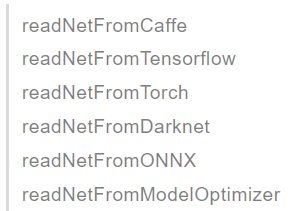

Because the OpenCV DNN module is mainly used for inference, a trained model must be prepared before use (training your own models with different styles will be covered later). OpenCV supports most models from all mainstream frameworks; the readNet family of functions shows the supported framework types:

The style transfer model used here is fast-neural-style, an open-source Torch (Lua) model. The project provides ten pretrained style transfer models, and the download script is available at:

https://github.com/jcjohnson/fast-neural-style/blob/master/models/download_style_transfer_models.sh

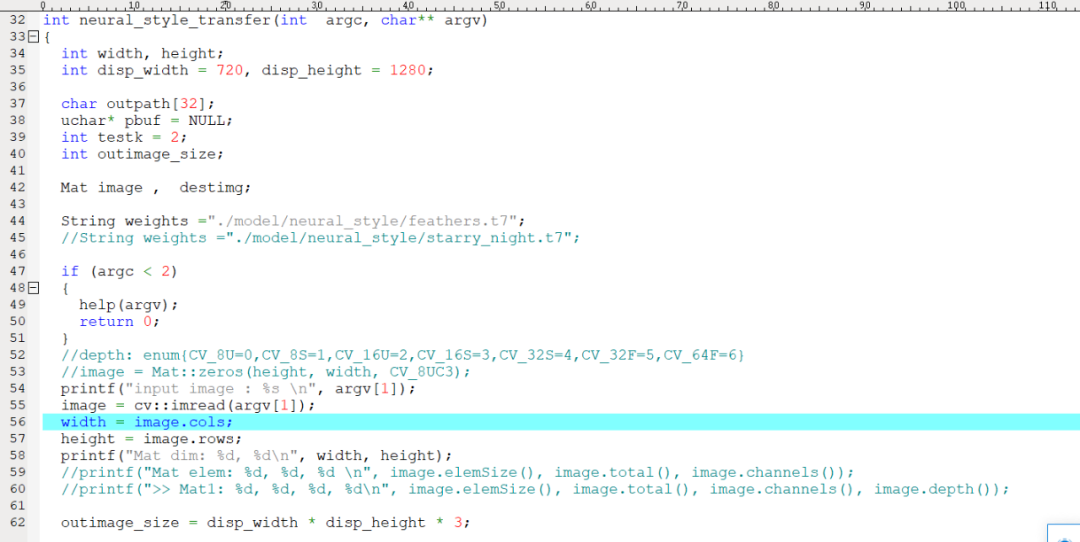

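Here, OpenCV's readNetFromTorch function (cv2.dnn.readNetFromTorch in Python) is used to load the Torch7 (.t7) model. Note that this function reads models saved by the original Lua Torch, not PyTorch models.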
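A minimal Python sketch of this loading step follows. It assumes the downloaded `.t7` files were copied into a flat `models` directory on the board; that directory name and the helpers `model_path` and `load_style_model` are hypothetical, while `cv2.dnn.readNetFromTorch` and `Net.empty()` are standard OpenCV calls.

```python
import os

# Assumed location of the .t7 files on the board (hypothetical).
MODEL_DIR = "models"

def model_path(style, model_dir=MODEL_DIR):
    """Build the path of the Torch7 file for a style such as "feathers"."""
    return os.path.join(model_dir, style + ".t7")

def load_style_model(style, model_dir=MODEL_DIR):
    """Load a fast-neural-style Torch7 model with OpenCV's DNN module."""
    import cv2  # imported here so model_path() works without OpenCV installed
    net = cv2.dnn.readNetFromTorch(model_path(style, model_dir))
    if net.empty():
        raise RuntimeError("could not load style model: " + style)
    return net
```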

2. Input image preprocessing

In OpenCV, an image passed to the model must first be packed into a four-dimensional data block (a blob, in N×C×H×W order) and preprocessed, for example by resizing, normalization (such as mean subtraction) and scaling.
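To make the preprocessing concrete, the plain-Python sketch below reproduces the two operations that `cv2.dnn.blobFromImage` performs here: subtracting a per-channel mean and reordering an H×W×C image into a 1×C×H×W blob. The BGR means (103.939, 116.779, 123.68) are those used in OpenCV's own fast-neural-style sample; treat them, and the helper name `to_blob`, as assumptions rather than the author's code. On the board one would simply call `cv2.dnn.blobFromImage(img, 1.0, (w, h), (103.939, 116.779, 123.68), swapRB=False, crop=False)`.

```python
# Assumed BGR channel means, as used in OpenCV's fast-neural-style sample.
MEAN_BGR = (103.939, 116.779, 123.68)

def to_blob(image, mean=MEAN_BGR):
    """Convert an H x W x 3 BGR image (nested lists) into a 1 x 3 x H x W blob.

    Mirrors blobFromImage with scale factor 1.0, no resizing, no channel
    swap and no cropping: per-channel mean subtraction, then HWC -> NCHW.
    """
    height = len(image)
    width = len(image[0])
    planes = []
    for c in range(3):  # one plane per colour channel (B, G, R)
        plane = [[image[y][x][c] - mean[c] for x in range(width)]
                 for y in range(height)]
        planes.append(plane)
    return [planes]  # add the batch dimension N = 1
```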

3. Model inference

Model inference is a forward pass of the input blob through the neural network. In OpenCV it takes just two lines of code:

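```python
net.setInput(blob)      # feed the preprocessed 4-D blob into the network
output = net.forward()  # forward pass; returns the result as a 1 x 3 x H x W blob
```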
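The network's output is again a 1×3×H×W blob in the mean-subtracted space, so it has to be converted back into a displayable image. The plain-Python sketch below mirrors the post-processing in OpenCV's fast-neural-style sample (add the channel means back, reorder to H×W×C); clipping to 0..255 and rounding to integers for an 8-bit image is an assumed extra step, and `from_blob` is a hypothetical name.

```python
# Assumed: the same BGR means that were subtracted during preprocessing.
MEAN_BGR = (103.939, 116.779, 123.68)

def from_blob(blob, mean=MEAN_BGR):
    """Turn a 1 x 3 x H x W blob (nested lists) into an H x W x 3 image of ints."""
    planes = blob[0]  # drop the batch dimension
    height = len(planes[0])
    width = len(planes[0][0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = []
            for c in range(3):
                value = planes[c][y][x] + mean[c]  # undo mean subtraction
                pixel.append(int(round(min(max(value, 0), 255))))  # clip to 8-bit
            row.append(pixel)
        image.append(row)
    return image
```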

4. Software System Design

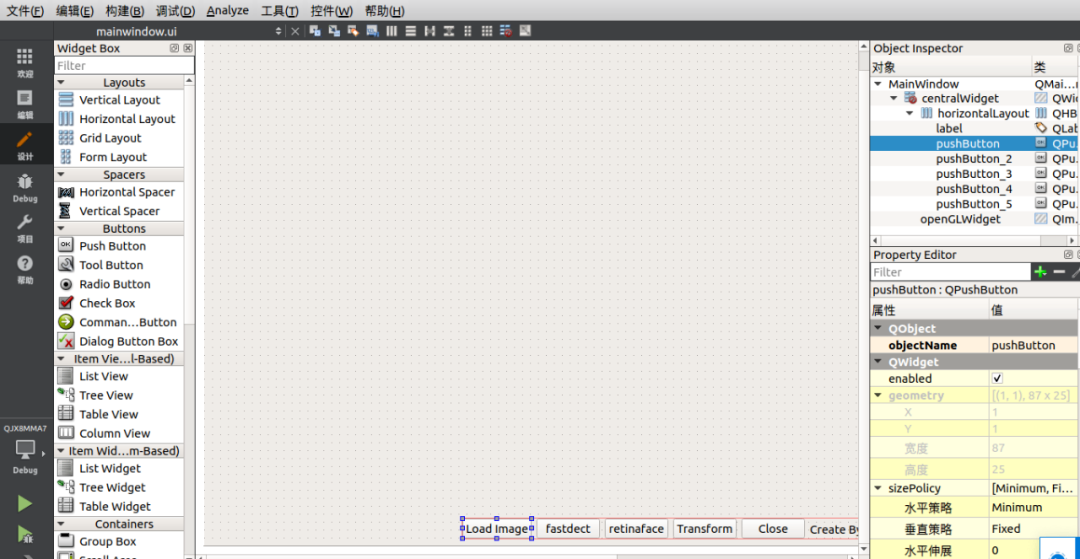

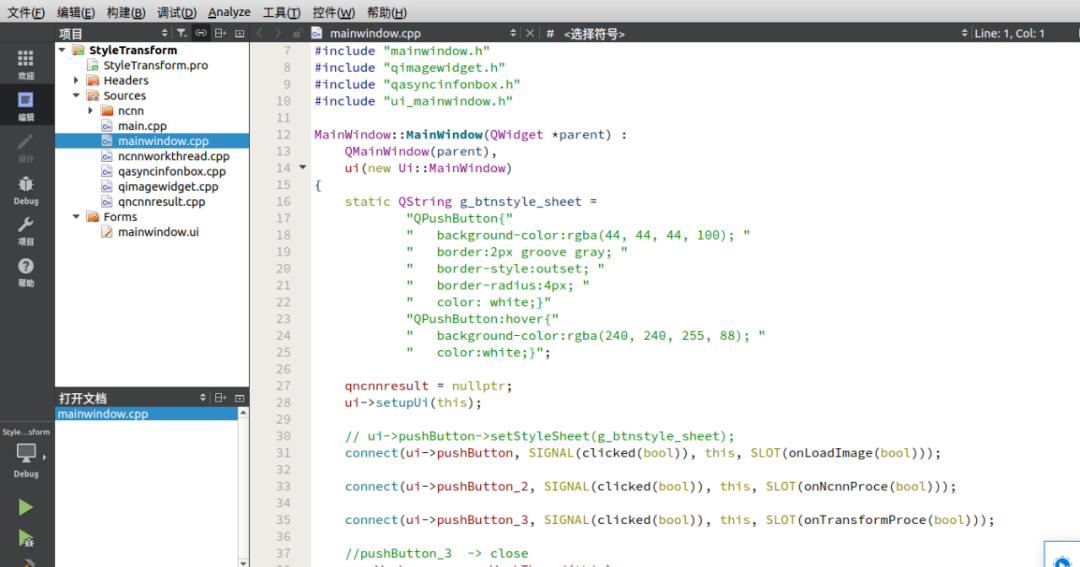

For this software we mainly use the OpenCV and Qt SDKs included in the MYD-YG2LX SDK. Qt provides the user interface for selecting files and running the style transfer, while OpenCV performs the DNN inference. The Qt interface lets users either select an image file or capture an original image from the camera.
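The OpenCV side of this design can be sketched in Python as follows. The project itself is built with Qt, so this is not the author's code: `parse_source`, `read_frame` and `stylize` are hypothetical names, the USB camera is assumed to be reachable as a `cv2.VideoCapture` index, the BGR means come from OpenCV's fast-neural-style sample, and `net` is assumed to be a network already loaded with `cv2.dnn.readNetFromTorch`.

```python
def parse_source(arg):
    """Hypothetical convention: digits mean a camera index, anything else a file path."""
    return int(arg) if arg.isdigit() else arg

def read_frame(source):
    """Grab one BGR image from a USB camera index or from an image file."""
    import cv2
    if isinstance(source, int):
        cap = cv2.VideoCapture(source)
        ok, frame = cap.read()
        cap.release()
        if not ok:
            raise RuntimeError("camera %d returned no frame" % source)
        return frame
    frame = cv2.imread(source)
    if frame is None:
        raise RuntimeError("cannot read image: " + source)
    return frame

def stylize(net, frame):
    """Run one style-transfer pass and return an 8-bit BGR image."""
    import cv2
    import numpy as np
    h, w = frame.shape[:2]
    mean = (103.939, 116.779, 123.68)  # assumed BGR means
    blob = cv2.dnn.blobFromImage(frame, 1.0, (w, h), mean, swapRB=False, crop=False)
    net.setInput(blob)
    out = net.forward()[0]                   # 3 x H x W, float32
    out += np.array(mean).reshape(3, 1, 1)   # undo mean subtraction
    out = out.transpose(1, 2, 0)             # CHW -> HWC
    return np.clip(out, 0, 255).astype(np.uint8)
```

For example, `stylize(cv2.dnn.readNetFromTorch("feathers.t7"), read_frame(parse_source("0")))` would stylize one frame from camera 0, assuming the model file is in the current directory.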

Develop the UI interaction logic.

Develop an OpenCV DNN module for calling the neural network.

Compile the code in the board's cross-compilation environment and deploy the result, together with the trained style transfer model files, to the development board. In practical testing, the following models ran normally on the board, while the others failed with out-of-memory errors:

1. udnie
2. la_muse
3. the_scream
4. candy
5. mosaic
6. feathers
7. starry_night
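A simple way to find out which models fit in the board's memory is to try each one in turn and record the failures. The sketch below assumes the check is done from Python; `check_models` is a hypothetical helper, and `loader` is any callable that raises on failure, for example one that calls `cv2.dnn.readNetFromTorch` and runs a single `forward()` on a test image, since memory errors may only show up during inference.

```python
def check_models(names, loader):
    """Try each model with `loader`; return (loaded, failed).

    `failed` holds (name, error message) pairs so the cause can be logged.
    """
    loaded, failed = [], []
    for name in names:
        try:
            loader(name)
        except Exception as exc:  # e.g. cv2.error or MemoryError on the board
            failed.append((name, str(exc)))
        else:
            loaded.append(name)
    return loaded, failed
```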

5. Running the Software

After deploying the Qt program and the model files to the development board, start the program on the board with the following command (the `-platform linuxfb` option tells Qt to render directly to the Linux framebuffer):

```shell
./style_transform -platform linuxfb
```

Once the program is running, select an image; it is displayed as follows:

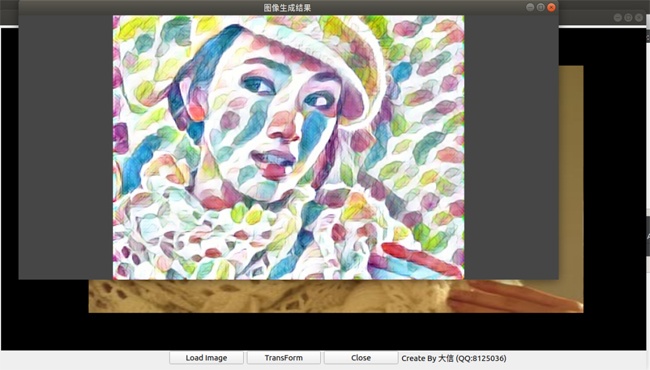

Click the "transform" button and wait about 13 seconds for the style transfer output, as shown below:

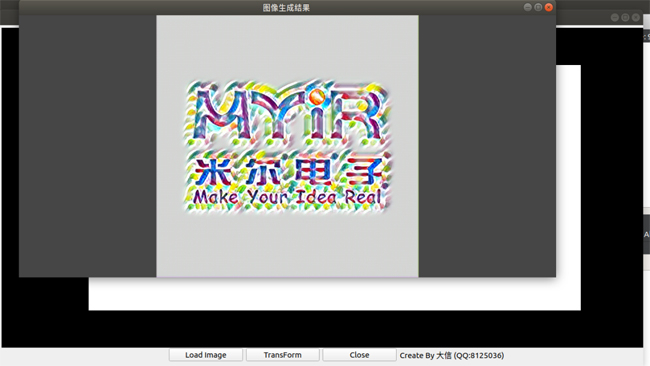

Next, we switched to MYIR's logo image. This image is small and its content varies little, so let us see how long the stylization takes:

The conversion still takes about 13 seconds, producing the following output image:

These stylized images were generated with the feathers model; the processing time for the other models is similar.

6. Development Postscript

Finally, we compiled the same image conversion program for macOS and compared a conversion on a Mac with the same image converted on the development board. On a 2.2 GHz MacBook Pro with 16 GB of RAM, the conversion takes 8.6 seconds. Across multiple tests with different models, the MYD-YG2LX reached roughly 66% of the Mac's conversion speed, which indicates that the board's DNN inference performance is relatively strong.
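For the example above, the 66% figure matches the ratio of the two conversion times, 8.6 s on the Mac versus about 13 s on the board. The sketch below shows that arithmetic together with a small timing helper; `average_seconds` and `relative_speed` are hypothetical names, and timing inference this way (for example `average_seconds(net.forward)` after `net.setInput(blob)`) is an assumed method, not necessarily how the author measured.

```python
import time

def average_seconds(fn, repeats=3):
    """Call fn() `repeats` times and return the mean wall-clock time in seconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats

def relative_speed(reference_seconds, board_seconds):
    """Board speed as a fraction of the reference machine (higher is better)."""
    return reference_seconds / board_seconds

# Times reported in the article: 8.6 s on the MacBook Pro, about 13 s on the board.
print("%.0f%%" % (100 * relative_speed(8.6, 13.0)))  # prints 66%
```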

As this project develops further, time permitting, we will try to train more distinctive styles and integrate generative adversarial network (GAN) models into the content generation pipeline, exploring various ways to generate richer and more colorful content.
